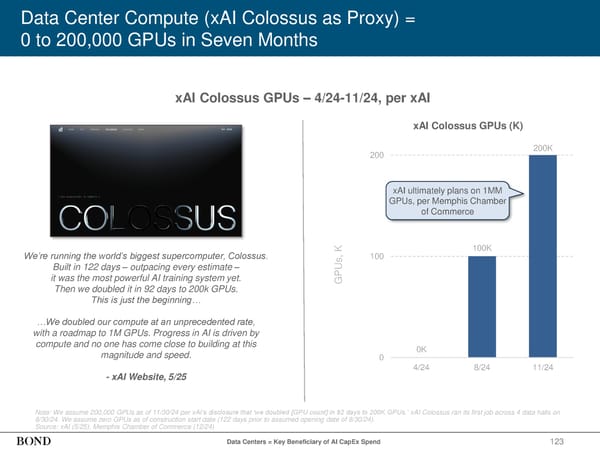

Data Center Compute (xAI Colossus as Proxy) = 0 to 200,000 GPUs in Seven Months

Data Centers = Key Beneficiary of AI CapEx Spend

“We’re running the world’s biggest supercomputer, Colossus. Built in 122 days – outpacing every estimate – it was the most powerful AI training system yet. Then we doubled it in 92 days to 200k GPUs. This is just the beginning… …We doubled our compute at an unprecedented rate, with a roadmap to 1M GPUs. Progress in AI is driven by compute and no one has come close to building at this magnitude and speed.” - xAI Website, 5/25

Chart: xAI Colossus GPUs (K), 4/24-11/24, per xAI. Y-axis: GPUs (K), 0K to 200K. Plotted values: 0 (4/24), 100 (8/24), 200 (11/24). Annotation: xAI ultimately plans on 1MM GPUs, per Memphis Chamber of Commerce.

Note: We assume 200,000 GPUs as of 11/30/24 per xAI’s disclosure that ‘we doubled [GPU count] in 92 days to 200K GPUs.’ xAI Colossus ran its first job across 4 data halls on 8/30/24. We assume zero GPUs as of construction start date (122 days prior to assumed opening date of 8/30/24).

Source: xAI (5/25), Memphis Chamber of Commerce (12/24)
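The timeline in the note can be checked with simple date arithmetic. The sketch below is illustrative only: the variable names are hypothetical, the 8/30/24 first-job date and the 122-day and 92-day intervals come from the note, and the average build rate is a derived figure that assumes a straight-line ramp the source does not claim.

```python
from datetime import date, timedelta

# Dates and intervals taken from the slide's note.
first_job = date(2024, 8, 30)      # first job across 4 data halls
build_days = 122                   # "Built in 122 days"
doubling_days = 92                 # "doubled it in 92 days to 200k GPUs"
final_gpus = 200_000

# Assumed construction start: 122 days before the first job.
construction_start = first_job - timedelta(days=build_days)

# Assumed date of reaching 200K GPUs: 92 days after the first job.
doubled_on = first_job + timedelta(days=doubling_days)

# Total elapsed time from zero GPUs to 200K GPUs.
total_days = (doubled_on - construction_start).days

# Illustrative only: average GPUs added per day under a straight-line ramp.
avg_gpus_per_day = final_gpus / total_days

print(construction_start)          # 2024-04-30
print(doubled_on)                  # 2024-11-30
print(total_days)                  # 214
print(round(avg_gpus_per_day))     # 935
```

The 214 days from 4/30/24 to 11/30/24 is where the "seven months" in the slide title comes from.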

2025 | Trends in Artificial Intelligence Page 123 Page 125
