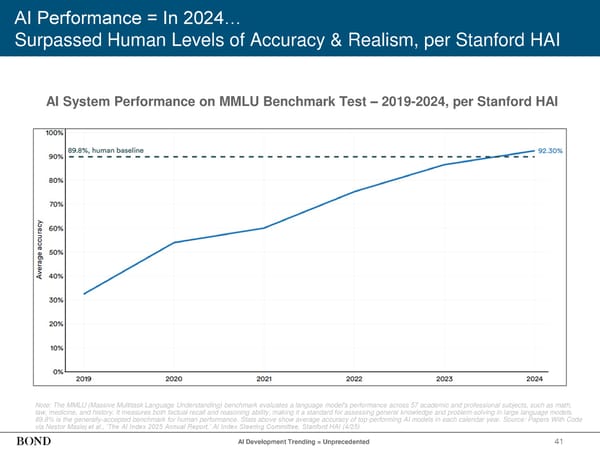

AI Performance = In 2024… Surpassed Human Levels of Accuracy & Realism, per Stanford HAI

AI System Performance on MMLU Benchmark Test – 2019-2024, per Stanford HAI

Note: The MMLU (Massive Multitask Language Understanding) benchmark evaluates a language model's performance across 57 academic and professional subjects, such as math, law, medicine, and history. It measures both factual recall and reasoning ability, making it a standard for assessing general knowledge and problem-solving in large language models. 89.8% is the generally-accepted benchmark for human performance. Stats above show average accuracy of top-performing AI models in each calendar year.

Source: Papers With Code via Nestor Maslej et al., ‘The AI Index 2025 Annual Report,’ AI Index Steering Committee, Stanford HAI (4/25)

AI Development Trending = Unprecedented

2025 | Trends in Artificial Intelligence Page 41 Page 43
